Running large language models locally has become increasingly popular among security professionals and developers who need privacy-focused AI solutions. This guide explains how to install an LLM on Kali Linux using Ollama, which provides a powerful, secure environment for AI experimentation whilst keeping you in complete control of your data. We will walk you through the entire process of setting up Ollama and running sophisticated large language models directly on your Kali Linux system.

Why install an LLM on Kali Linux?

Installing an LLM on Kali Linux offers significant advantages for hackers, cybersecurity professionals and privacy-conscious users. Unlike cloud-based AI services, a local installation keeps all your queries, conversations and data on your own machine. This removes data-privacy concerns, works offline once models are downloaded, and involves no subscription fees or API costs: your usage is limited only by your hardware.

Kali Linux’s robust security-focused environment makes it an ideal platform for AI experimentation. When you install an LLM on Kali Linux using Ollama, you’re creating a completely self-contained AI environment that can assist with security research, code analysis and documentation tasks without sending your prompts or data over the internet.

System Requirements for Installing an LLM on Kali Linux

Before you install an LLM on Kali Linux, make sure your system meets the minimum requirements. You’ll need at least 8GB of RAM for smaller models, though 16GB or more is recommended for good performance. You will also need enough free storage, as larger models can require 15-20GB or more. A modern multi-core processor will significantly improve response times when running language models.
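If you want to check a machine before installing, the short Python script below compares total RAM and free disk space with the figures above. It is a rough sketch that assumes a Linux system with /proc/meminfo; the thresholds come from this section, while the small allowance for memory the system reserves (MemTotal usually reads a little under the installed RAM) and the function names are my own assumptions.

```python
import os
import shutil

GIB = 1024 ** 3

# Thresholds from this guide. MemTotal usually reports slightly less than the
# installed RAM, so the cut-offs allow roughly 0.5-1 GiB of slack (an assumption).
MIN_RAM_GIB = 7.5            # "at least 8GB of RAM"
RECOMMENDED_RAM_GIB = 15.0   # "16GB or more is recommended"
LARGE_MODEL_DISK_GIB = 20.0  # "larger models can require 15-20GB or more"


def parse_meminfo_kib(text: str, field: str) -> int:
    """Return one field from /proc/meminfo-style text, in kB as reported."""
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        if name.strip() == field:
            return int(rest.split()[0])
    raise KeyError(field)


def ram_verdict(total_gib: float) -> str:
    """Classify total RAM against the guide's minimum and recommended sizes."""
    if total_gib < MIN_RAM_GIB:
        return "below minimum"
    if total_gib < RECOMMENDED_RAM_GIB:
        return "minimum: stick to small models"
    return "recommended"


def main() -> None:
    if not os.path.exists("/proc/meminfo"):
        print("/proc/meminfo not found; this check only works on Linux")
        return
    with open("/proc/meminfo") as f:
        # /proc/meminfo reports kB (units of 1024 bytes).
        total_gib = parse_meminfo_kib(f.read(), "MemTotal") * 1024 / GIB
    # The Linux service keeps models under /usr/share/ollama, which normally
    # lives on the root filesystem, so "/" is checked here.
    free_gib = shutil.disk_usage("/").free / GIB
    disk_note = ("enough for larger models" if free_gib >= LARGE_MODEL_DISK_GIB
                 else "small models only")
    print(f"RAM:  {total_gib:.1f} GiB ({ram_verdict(total_gib)})")
    print(f"Disk: {free_gib:.1f} GiB free ({disk_note})")


if __name__ == "__main__":
    main()
```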

The beauty of Ollama is its compatibility with various hardware configurations. Whether you’re running Kali Linux on a high-end workstation or a modest laptop, you can find suitable models that balance performance with resource consumption.

Supported System Architecture

Ollama supports both 64-bit x86 (x86-64) and 64-bit ARM (ARM64) systems, so the installation commands and procedures in this guide work the same way on desktops, laptops and ARM devices such as the Raspberry Pi running a 64-bit OS. Performance, however, scales with the hardware: a high-end workstation will respond far faster than a portable penetration testing device.
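To see which case your machine falls into, the snippet below maps the architecture string reported by uname -m (via Python's platform.machine()) to the two 64-bit families mentioned above. The mapping table is my own simplification rather than anything taken from Ollama's installer, so treat an "unsupported" result as a prompt to check Ollama's documentation rather than a final answer.

```python
import platform
from typing import Optional

# Simplified mapping of `uname -m` values to the 64-bit families covered in
# this guide. 32-bit values such as i686 or armv7l are treated as unsupported.
ARCH_FAMILIES = {
    "x86_64": "x86-64",
    "amd64": "x86-64",
    "aarch64": "ARM64",
    "arm64": "ARM64",
}


def arch_family(machine: str) -> Optional[str]:
    """Return the architecture family for a `uname -m` string, or None."""
    return ARCH_FAMILIES.get(machine.strip().lower())


if __name__ == "__main__":
    machine = platform.machine()
    family = arch_family(machine)
    if family:
        print(f"{machine}: {family}, covered by this guide")
    else:
        print(f"{machine}: not a 64-bit x86/ARM system; check Ollama's docs")
```

On a Raspberry Pi, a result of armv7l usually means a 32-bit image is installed.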

Step 1: Installing Ollama on Kali Linux

To install an LLM on Kali Linux, we’ll use Ollama, an open-source tool designed specifically for running large language models locally. Begin by opening a terminal window in your Kali Linux environment. You can access the terminal through the applications menu or by using the keyboard shortcut Ctrl + Alt + T.

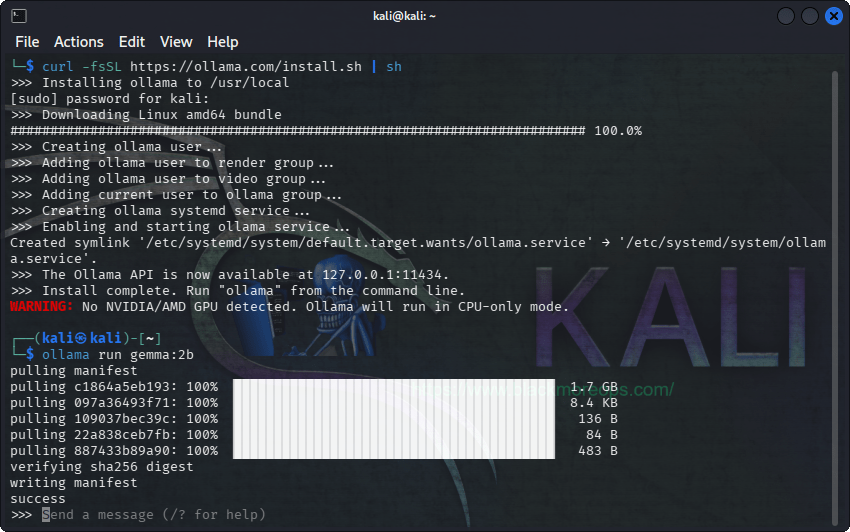

Execute the following command to download and install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

This installation script downloads Ollama and configures it on your Kali Linux system, including a background service. The process may take several minutes depending on your internet connection. When the terminal prompt returns, confirm that the installation worked by running ollama --version.
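You can also confirm that the background server is answering. The sketch below queries Ollama's local REST API, assuming its default address of 127.0.0.1:11434 and the GET /api/version endpoint; the helper names are hypothetical and only Python's standard library is used.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default listen address


def parse_version(body: bytes) -> str:
    """Extract the version string from an /api/version response body."""
    return json.loads(body)["version"]


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 3.0) -> Optional[str]:
    """Return the server version, or None if nothing answers."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return parse_version(resp.read())
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return None


if __name__ == "__main__":
    version = ollama_version()
    if version:
        print(f"Ollama server is up, version {version}")
    else:
        print("No answer on port 11434; try: sudo systemctl status ollama")
```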

Download Ollama, then install an LLM on Kali Linux. We’ve used gemma:2b here, which is a small LLM suitable for testing.

Step 2: Choosing and Running Your First Language Model

After installing Ollama on Kali Linux, the next step is selecting an appropriate model. Ollama supports numerous models with varying capabilities and resource requirements. For beginners, we recommend starting with smaller models such as Gemma 2B or Phi-3 Mini, which need fewer system resources whilst still providing impressive AI capabilities.

To download and run a model, use the following command structure:

ollama run [model-name]

For example, to run the Gemma 2B model:

ollama run gemma:2b

The first time you run a model, Ollama will download it automatically. Progress bars will indicate the download status. Once downloaded, you’ll see a prompt where you can begin interacting with the AI model directly through the terminal.
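Besides the interactive prompt, the same Ollama server exposes a local REST API, which is handy for scripting. Below is a minimal sketch that sends one prompt to gemma:2b through POST /api/generate, assuming the default address 127.0.0.1:11434 and that the model has already been pulled; setting stream to false asks for a single JSON reply instead of a stream of partial responses. The function names are my own.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default listen address


def build_generate_request(model: str, prompt: str) -> dict:
    """JSON body for POST /api/generate; stream=False returns one object."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(build_generate_request(model, prompt)).encode(),
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: the first request also loads the model into memory.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(generate("gemma:2b", "Explain in one sentence what nmap -sV does."))
    except OSError as exc:  # connection refused, timeout, HTTP error, ...
        print(f"Could not reach Ollama: {exc}")
```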

Available Models and Their Specifications

When you install an LLM on Kali Linux using Ollama, you gain access to an extensive library of models with varying capabilities and resource requirements. A model’s parameter count typically correlates with its sophistication and accuracy, though larger models need more memory and storage space.

Understanding model sizes helps you choose the right balance between performance and resource consumption for your Kali Linux system. Here’s a breakdown of some of the available models; sizes are approximate and vary with the quantisation of the version you download:

| Model Name | Parameters | Approx. Size (GB) | Best Use Cases | Speed Rating |
|---|---|---|---|---|
| oLLama-7B | 7 billion | 13 | General-purpose tasks, balanced performance | ⭐⭐⭐ |
| oLLama-3B | 3 billion | 6 | Lightweight general tasks, resource-constrained systems | ⭐⭐⭐⭐ |
| oLLama-1B | 1 billion | 2 | Basic tasks, minimal resource usage | ⭐⭐⭐⭐ |
| oLLama-500M | 500 million | 1 | Ultra-lightweight, testing purposes | ⭐⭐⭐ |
| oLLama-300M | 300 million | 0.6 | Experimental, very limited capabilities | ⭐⭐ |
| Llama2-7B | 7 billion | 13 | Advanced reasoning, complex conversations | ⭐⭐ |
| Llama2-13B | 13 billion | 26 | Professional applications, detailed analysis | ⭐ |
| Phi-3 Mini | 3 billion | 3.8 | Microsoft’s efficient coding assistant | ⭐⭐ |
| Phi-3 Medium | 14 billion | 15 | Enhanced reasoning, professional development | ⭐ |
| Orca Mini | 7 billion | 13 | Instruction-following, task completion | ⭐⭐⭐ |
| Solar | 10.7 billion | 6.1-21 | Korean-English bilingual capabilities | ⭐⭐ |
| Gemma-2B | 2 billion | 3.5 | Google’s efficient general-purpose model | ⭐⭐⭐⭐⭐ |
| Gemma-7B | 7 billion | 11.5 | Enhanced version with better reasoning | ⭐⭐⭐ |
| LLaVA-7B | 7 billion | 5.5 | Image analysis and visual understanding | ⭐⭐ |
| LLaVA-13B | 13 billion | 17 | Advanced multimodal capabilities | ⭐ |
| StarCoder-7B | 7 billion | 15 | Code generation and programming assistance | ⭐⭐ |
| CodeLlama-7B | 7 billion | 13 | Meta’s specialised coding model | ⭐⭐ |
| Dolphin-2.2-70B | 70 billion | 28 | Advanced reasoning, research applications | ⭐ |
| Magicoder-7B | 7 billion | 10.5 | Enhanced code understanding and generation | ⭐⭐ |
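The sizes in the table follow a simple rule of thumb: a model's weights take roughly (number of parameters) × (bits per weight) / 8 bytes. The helper below applies it. It ignores runtime overhead such as the context cache, and the 16-bit and 4-bit figures are just two common precisions, not a statement about which version any particular download uses. For example, a 7-billion-parameter model needs about 7 × 16 / 8 = 14 GB at 16-bit precision, in line with the 13 GB listed for several 7B models above, but only about 3.5 GB when quantised to 4 bits.

```python
def weights_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in GB (10**9 bytes).

    size = parameters x bits per weight / 8. Runtime overhead (context/KV
    cache, the runtime itself) is not included, so real usage is higher.
    """
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    for name, params in [("Gemma-2B", 2), ("Llama2-7B", 7), ("Llama2-13B", 13)]:
        fp16 = weights_size_gb(params, 16)
        q4 = weights_size_gb(params, 4)
        print(f"{name:<11} 16-bit: {fp16:5.1f} GB   4-bit: {q4:5.1f} GB")
```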


Choosing the Right Model for Your Kali Linux System

When you install an LLM on Kali Linux, the right model depends on your system specifications and intended use:

For systems with 8GB RAM or less: Start with smaller models like Gemma-2B, Phi-3 Mini, or oLLama-1B. These models provide impressive capabilities whilst maintaining reasonable resource consumption.

For systems with 16GB RAM: Consider mid-range models like Llama2-7B, CodeLlama-7B, or LLaVA-7B. These offer excellent performance for most professional applications.

For high-end systems with 32GB+ RAM: Explore larger models like Llama2-13B, Phi-3 Medium, or even Dolphin-2.2-70B for research and advanced applications.

Security professionals working on Kali Linux might particularly benefit from CodeLlama or StarCoder models for vulnerability analysis, whilst those requiring image analysis capabilities should consider the LLaVA variants.
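The RAM tiers above can be written down as a small lookup. This sketch reads the machine's physical RAM with os.sysconf (Linux) and returns the example models this guide suggests for that tier. The names are the table's labels rather than exact Ollama tags, and treating everything below 16GB as the small-model tier is my interpretation of the guidance.

```python
import os
from typing import List

# (minimum RAM in GB, example models) taken from the guidance above,
# largest tier first. Names are the table's labels, not Ollama tags.
TIERS = [
    (32, ["Llama2-13B", "Phi-3 Medium", "Dolphin-2.2-70B"]),
    (16, ["Llama2-7B", "CodeLlama-7B", "LLaVA-7B"]),
    (0, ["Gemma-2B", "Phi-3 Mini", "oLLama-1B"]),
]


def suggest_models(ram_gb: float) -> List[str]:
    """Return the example models for the highest tier the RAM qualifies for."""
    for minimum_gb, models in TIERS:
        if ram_gb >= minimum_gb:
            return models
    return []


def physical_ram_gb() -> float:
    """Physical RAM in decimal GB (10**9 bytes), via Linux sysconf names."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9


if __name__ == "__main__":
    ram = physical_ram_gb()
    print(f"{ram:.1f} GB RAM -> try: {', '.join(suggest_models(ram))}")
```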

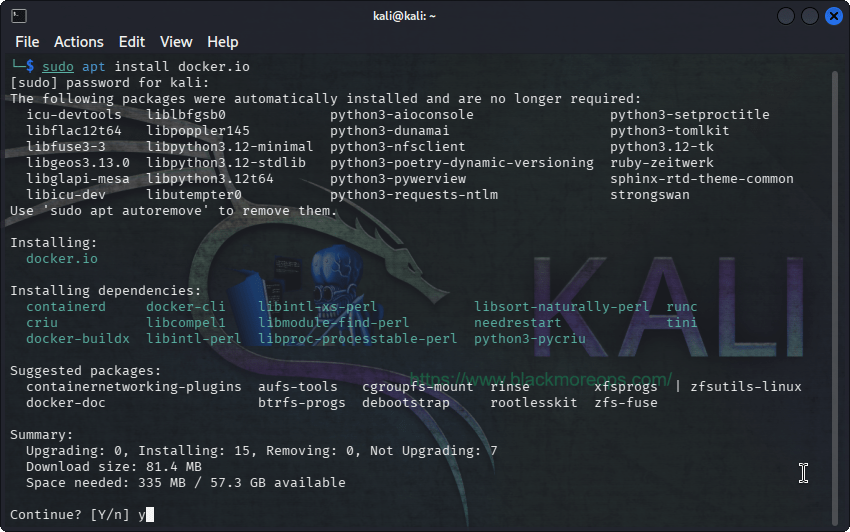

Setting Up the Web Interface

Whilst the terminal interface works perfectly for basic interactions, a web interface makes the experience considerably better. Open WebUI provides a ChatGPT-like interface that’s more intuitive for extended conversations and complex tasks.

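First, install Docker on your Kali Linux system and enable its service so that it starts now and at every boot:

sudo apt update && sudo apt install -y docker.io
sudo systemctl enable --now docker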

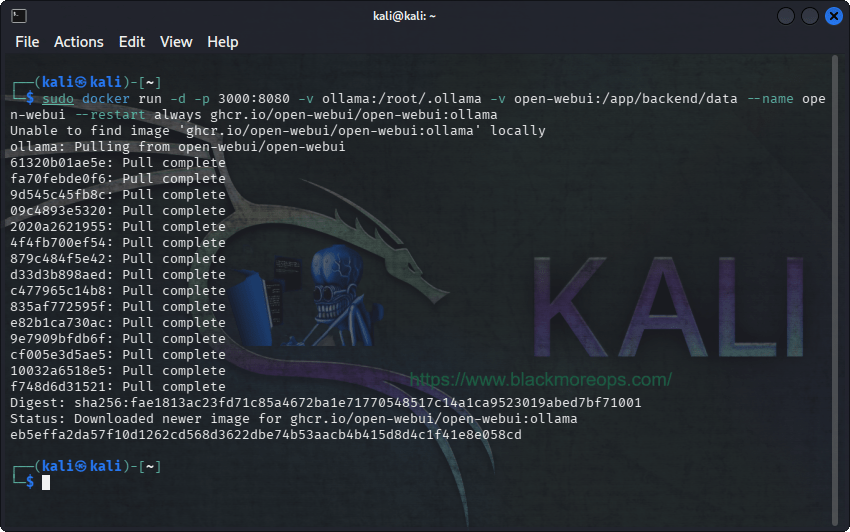

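Once Docker is installed, deploy Open WebUI using the command below. The :ollama image tag bundles its own copy of Ollama inside the container, and the two -v options create named volumes so that models and chat data persist across restarts: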

sudo docker run -d -p 3000:8080 -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

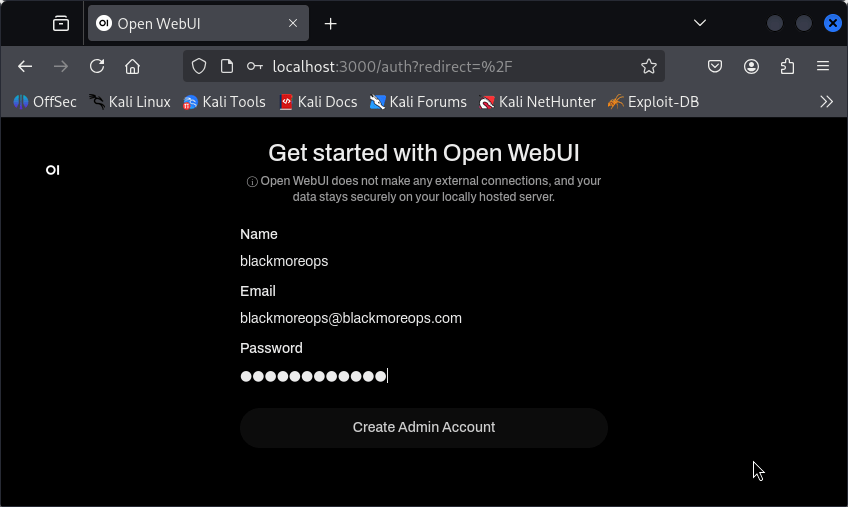

After the container is running, open http://localhost:3000 in your web browser (port 3000 on the host is mapped to port 8080 inside the container). You’ll need to create a local account using any email address; this information stays entirely on your system and is used only for local authentication. The first account you create becomes the administrator account.
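The container can take a little while to start the first time, so the page may not load immediately. The helper below polls a URL until the server answers. It is a generic sketch using only Python's standard library; the function name and the default timings are my own choices.

```python
import http.client
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Poll `url` until any HTTP response arrives or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            return True  # the server answered, even if with an error status
        except (OSError, http.client.HTTPException):
            pass  # refused or reset while the container is still starting
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, wait_for_http("http://localhost:3000") returns True once Open WebUI accepts connections, or False after two minutes without an answer.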

Advanced Features and Image Analysis

One of the most impressive capabilities available when you install an LLM on Kali Linux is image analysis through vision-enabled models such as LLaVA. These models can describe images, extract text, analyse screenshots and even assist with visual cybersecurity tasks.

To use image analysis features, download a vision model:

ollama run llava:latest

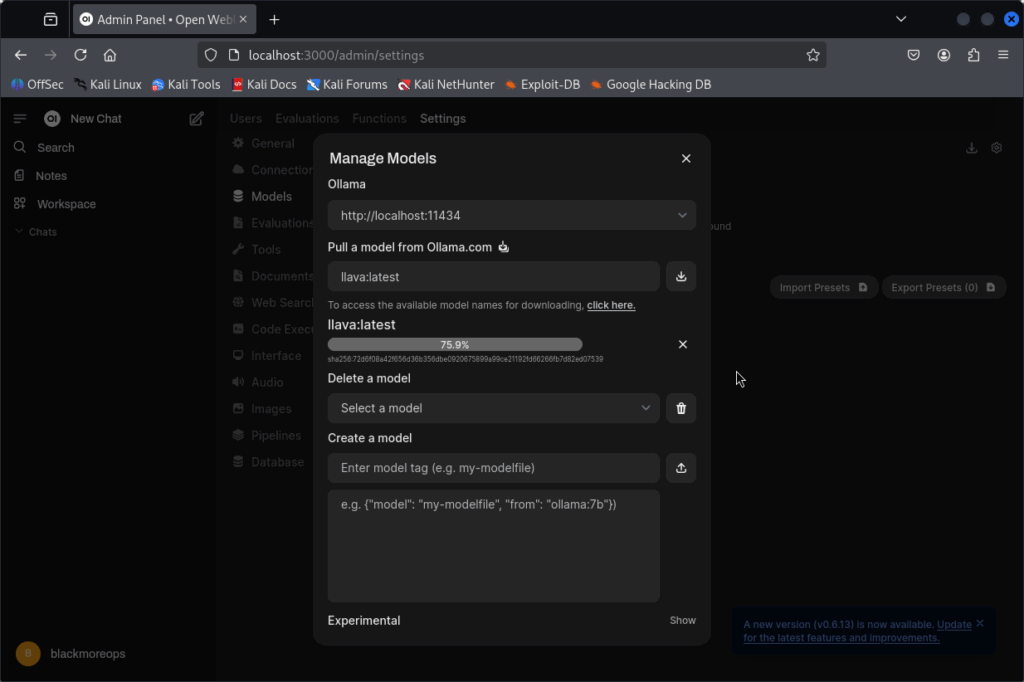

I found that pulling the model through the web UI was easier and a little quicker, though that may just be my setup.

To do this, go to User Icon > Admin Panel > Settings > Models > Pull a model from Ollama.com and paste llava:latest into the field.

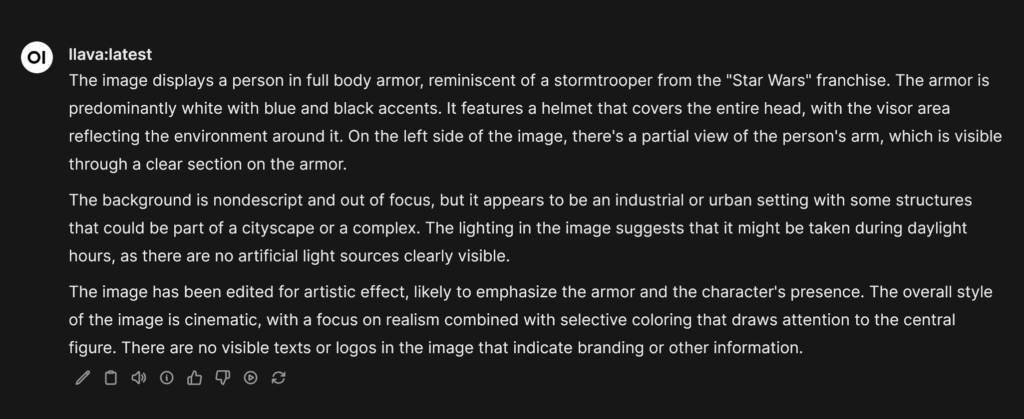

Through the web interface, you can upload images and ask the AI to analyse them, describe their contents or extract information. This functionality is particularly valuable for security professionals analysing suspicious files, screenshots or network diagrams. As a test, I uploaded an image of a Stormtrooper and asked the model to describe it.

The output will look something like the example below and can be quite extensive: LLaVA can be very chatty at times and produce a large dump of text.
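If you would rather script image analysis, Ollama's POST /api/generate endpoint accepts an images list of base64-encoded files for vision models such as LLaVA. The sketch below assumes it talks to the Ollama service installed in Step 1 at 127.0.0.1:11434, with llava:latest pulled there via ollama run llava:latest. The Open WebUI container above runs its own bundled copy of Ollama and only publishes port 3000, so models pulled through the web UI are not visible at this address. The optional max_tokens argument (my own name) maps to Ollama's num_predict option, which caps the length of the reply and helps with LLaVA's chattiness.

```python
import base64
import json
import urllib.request
from pathlib import Path
from typing import Optional

OLLAMA_URL = "http://127.0.0.1:11434"  # host Ollama service from Step 1


def build_vision_request(model: str, prompt: str, image_bytes: bytes,
                         max_tokens: Optional[int] = None) -> dict:
    """JSON body for POST /api/generate with one base64-encoded image."""
    body = {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }
    if max_tokens is not None:
        body["options"] = {"num_predict": max_tokens}  # cap reply length
    return body


def describe_image(path: str, prompt: str = "Describe this image.",
                   model: str = "llava:latest",
                   max_tokens: Optional[int] = 300) -> str:
    payload = build_vision_request(model, prompt, Path(path).read_bytes(), max_tokens)
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
    )
    # Vision models can be slow on CPU, hence the long timeout.
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.loads(resp.read())["response"]
```

For example, describe_image("screenshot.png", "Which application is shown in this screenshot?") would return the model's description; the file name here is hypothetical.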

Optimising Performance and Troubleshooting

After you install an LLM on Kali Linux, optimising performance ensures the best possible experience. Monitor system resources while a model is in use and close unnecessary applications to free up RAM. If responses seem slow, try switching to a smaller model or make sure no other resource-intensive processes are running at the same time.
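To see what Ollama itself is holding in memory, the ollama ps command lists the loaded models; the same information is available from GET /api/ps. The sketch below formats that list and unloads a model immediately by sending a request with keep_alive set to 0, which Ollama documents as the way to unload a model. It assumes the default address 127.0.0.1:11434; the function names are hypothetical.

```python
import json
import urllib.request
from typing import List

OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default listen address


def summarise_running(ps_response: dict) -> List[str]:
    """Format the model list returned by GET /api/ps."""
    lines = []
    for m in ps_response.get("models", []):
        size_gb = m.get("size", 0) / 1e9
        vram_gb = m.get("size_vram", 0) / 1e9
        lines.append(f"{m['name']}: {size_gb:.1f} GB loaded ({vram_gb:.1f} GB on GPU)")
    return lines


def running_models(base_url: str = OLLAMA_URL) -> dict:
    with urllib.request.urlopen(f"{base_url}/api/ps", timeout=5) as resp:
        return json.loads(resp.read())


def unload(model: str, base_url: str = OLLAMA_URL) -> None:
    """Ask Ollama to unload `model` now (no prompt, keep_alive=0)."""
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps({"model": model, "keep_alive": 0}).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        resp.read()
```

For example, print("\n".join(summarise_running(running_models()))) shows what is loaded, and unload("gemma:2b") frees that model's memory.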

For troubleshooting, run ollama list: if it prints your installed models, the Ollama service is running correctly. If it cannot connect, check the service with sudo systemctl status ollama.

If the web interface doesn’t load, check that Docker is running with sudo systemctl status docker and that the container is up with sudo docker ps. Most installation issues are resolved by ensuring adequate system resources and a stable internet connection during the initial model downloads.
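These checks can be combined into one quick diagnostic. This sketch assumes the default addresses used in this guide (Ollama on 127.0.0.1:11434, Open WebUI on localhost:3000) and uses GET /api/tags, the API counterpart of ollama list; any connection or HTTP error is simply reported as "not reachable". The function names are my own.

```python
import json
import urllib.request
from typing import List, Optional

OLLAMA_URL = "http://127.0.0.1:11434"
WEBUI_URL = "http://localhost:3000"


def probe(url: str, timeout: float = 3.0) -> Optional[bytes]:
    """Return the response body, or None on any connection or HTTP error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read()
    except OSError:
        return None


def installed_model_names(tags_response: dict) -> List[str]:
    """Model names from a GET /api/tags response, sorted."""
    return sorted(m["name"] for m in tags_response.get("models", []))


def diagnose() -> None:
    body = probe(f"{OLLAMA_URL}/api/tags")
    if body is None:
        print("Ollama API not reachable: try 'sudo systemctl status ollama'")
    else:
        names = installed_model_names(json.loads(body))
        print("Ollama models:", ", ".join(names) or "(none pulled yet)")

    if probe(WEBUI_URL) is None:
        print("Open WebUI not reachable: try 'sudo systemctl status docker' "
              "and 'sudo docker ps'")
    else:
        print("Open WebUI is answering on port 3000")


if __name__ == "__main__":
    diagnose()
```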

Security Considerations and Best Practices

When you install an LLM on Kali Linux (or on Kali under WSL), you’re creating a powerful tool that requires responsible use. Always download models from official sources to avoid potential security risks. Keep Ollama up to date to benefit from security patches and performance improvements; on Linux, re-running the install script from Step 1 updates it.

Consider the ethical implications of AI usage, particularly in security contexts. Whilst these tools can assist with legitimate security research and educational purposes, ensure your usage complies with applicable laws and ethical guidelines. The privacy benefits of local AI installation come with the responsibility of using these capabilities appropriately.

Expanding Your AI Toolkit

Successfully installing an LLM on Kali Linux opens up numerous possibilities for enhancing your workflow. Consider experimenting with different models to find those best suited to your specific needs. Explore prompt engineering techniques to get more out of your AI interactions, and investigate ways to integrate them with your existing security tools and workflows.

Your local AI installation provides a foundation for advanced projects, from automated security report generation to intelligent log analysis. As you become more comfortable with the technology, consider developing custom scripts and integrations that leverage these powerful language models to enhance your security research and development activities.
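As a starting point for intelligent log analysis, the sketch below sends the tail of a log file to a local model through Ollama's POST /api/chat endpoint, using a system message to set the task. It assumes Ollama's default address and a pulled gemma:2b; the prompt wording, the 200-line limit and the function names are illustrative choices, not a tested recipe.

```python
import json
import urllib.request
from collections import deque

OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default listen address
SYSTEM_PROMPT = (
    "You are helping with an authorised review of system logs. "
    "Summarise notable events and flag anything that looks suspicious."
)


def tail_lines(text: str, n: int) -> str:
    """Keep only the last n lines of text."""
    return "\n".join(deque(text.splitlines(), maxlen=n))


def build_chat_request(model: str, log_excerpt: str) -> dict:
    """JSON body for POST /api/chat with a system and a user message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Log excerpt:\n{log_excerpt}"},
        ],
        "stream": False,
    }


def analyse_log(path: str, model: str = "gemma:2b", max_lines: int = 200) -> str:
    with open(path, encoding="utf-8", errors="replace") as f:
        excerpt = tail_lines(f.read(), max_lines)  # reads the whole file first
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(build_chat_request(model, excerpt)).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.loads(resp.read())["message"]["content"]
```

For example, export recent journal entries with journalctl -n 200 > recent.log and then call analyse_log("recent.log").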

Based on all the tests, I would suggest

That’s it, guys. Enjoy.